A how-to on getting started with Hugo as the framework for a static website, using Google Cloud Storage (GCS) to host the content.

Set up your DNS to point to the Google Cloud Storage API

- Create a CNAME record for `blog.example.com` pointing to `c.storage.googleapis.com`


Create the GCS bucket

- Name the bucket `blog.example.com`, matching the CNAME host. You will be asked to prove that you own the domain `example.com` or the subdomain `blog.example.com`.

- You can verify via a TXT record for the domain or via a URL for the subdomain.

- See https://cloud.google.com/storage/docs/domain-name-verification for verification instructions.

Get Hugo for your OS from gohugo.io and check out the quick start documentation: https://gohugo.io/getting-started/quick-start/

Generate the content

- Run `hugo`; this will create everything you need in the `./public/` directory.

- You can upload the contents of `./public` to the GCS bucket, or set up a deployment config and let Hugo do it for you with `hugo deploy`.

(Optional) If you have the Google Cloud SDK set up (https://cloud.google.com/sdk/docs/install), you can configure Hugo to deploy the content to the GCS bucket from your local machine. Add the deployment target below to the Hugo config file, then run `hugo deploy`.

    [[deployment.targets]]
    # An arbitrary name for this target.
    name = "blog.example.com"
    # The Go Cloud Development Kit URL to deploy to. Examples:
    # GCS; see https://gocloud.dev/howto/blob/#gcs
    URL = "gs://blog.example.com"
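For the manual upload route mentioned above, here is a minimal sketch, assuming the Google Cloud SDK's `gsutil` is installed and authenticated. The `run` helper and the `DRY_RUN` switch are illustrative additions, not part of Hugo or gsutil; by default the script only prints the command so you can check it first.

```shell
# Sketch: sync Hugo's output directory to the bucket by hand.
BUCKET="gs://blog.example.com"   # bucket created earlier
SRC="./public"                   # Hugo's default output directory

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# -m runs transfers in parallel, -r recurses into subdirectories,
# -d deletes bucket objects that no longer exist locally.
run gsutil -m rsync -r -d "$SRC" "$BUCKET"
```

Set `DRY_RUN=0` to perform the upload. Because `-d` removes remote objects, it may be worth adding gsutil's own `-n` flag to `rsync` first, which lists what would change without copying anything.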

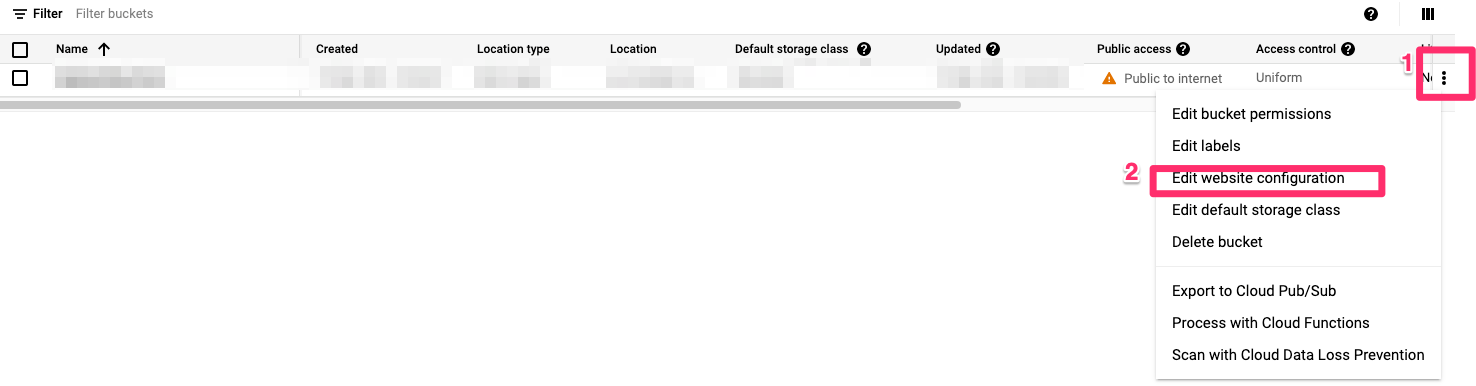

- Once uploaded, set up the website settings of the GCS bucket: `index.html` as the main page and a 404 page as the error page.
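The website settings can also be applied from the command line. A minimal sketch, assuming `gsutil` is installed and that the error page was generated as `404.html` at the bucket root; the variable names are illustrative, and the command is only printed until you run it yourself.

```shell
# Sketch: set the bucket's main page and not-found page.
BUCKET="gs://blog.example.com"
MAIN_PAGE="index.html"   # served for directory-style URLs
ERROR_PAGE="404.html"    # assumed name of the generated 404 page

# Build the command as text first so it can be reviewed.
WEB_CMD="gsutil web set -m $MAIN_PAGE -e $ERROR_PAGE $BUCKET"
echo "$WEB_CMD"

# To apply it, run the printed command, then confirm with:
#   gsutil web get gs://blog.example.com
```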

Bonus: an example 404 page layout. Save it as `layouts/404.html`:

    {{ define "main" }}
    <main id="main">
      <div>
        <h1 id="title"><a href="{{ "/" | relURL }}">Go home.</a></h1>
      </div>
    </main>
    {{ end }}

- (Optional) Set up a GitHub Actions workflow to deploy the blog and its content

- Create a service account in GCP for use with GitHub Actions

- Set up a custom role that gives the service account (SA) just enough permissions on the GCS bucket, then add the SA with the new role to the bucket itself. Do not grant the role or its permissions at the project level.

- The following permissions seem to be sufficient; Hugo needs to be able to create, read, update, and delete objects in the bucket:


storage.objects.create

storage.objects.delete

storage.objects.get

storage.objects.list

storage.objects.update

- Store the base64-encoded service account key as a GitHub Actions secret (the example workflow reads it from `GCP_CREDENTIALS`, alongside `GCP_PROJECT_ID`)

- Example GitHub Actions workflow for reference:

    name: DeployBlog

    on:
      # Triggers the workflow when a pull request against the master branch is closed
      pull_request:
        branches: [master]
        # Trigger on closed PRs
        types:
          - closed
      # Allows you to run this workflow manually from the Actions tab
      workflow_dispatch:

    jobs:
      build-and-deploy:
        # Run only for merged PRs (merged is false if the PR was closed without merging) or for manual runs
        if: github.event_name == 'workflow_dispatch' || github.event.pull_request.merged == true
        runs-on: ubuntu-latest
        steps:
          # Checks out your repository under $GITHUB_WORKSPACE, so your job can access it
          - uses: actions/checkout@v2
            with:
              submodules: recursive # Fetch the theme
              fetch-depth: 0 # Fetch all history for .GitInfo and .Lastmod
          # Log in to gcloud and export credentials
          - name: Login
            uses: google-github-actions/setup-gcloud@master
            with:
              project_id: ${{ secrets.GCP_PROJECT_ID }}
              service_account_key: ${{ secrets.GCP_CREDENTIALS }}
              export_default_credentials: true
          - name: Hugo setup
            uses: peaceiris/actions-hugo@v2 # v2 assumed; pin the release you need
            with:
              hugo-version: "latest"
          - name: Build
            run: hugo --minify
          - name: Deploy
            run: hugo deploy --target="blog.example.com"
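The service account steps above can be sketched with the gcloud CLI. This is an outline under assumptions rather than a tested recipe: the project ID, account name, role ID and key file name are all hypothetical, and the commands are only printed unless `DRY_RUN=0` is set. The `encode_key` helper shows one way to produce the single-line base64 value for the `GCP_CREDENTIALS` secret.

```shell
# Hypothetical names; replace them with your own.
PROJECT_ID="my-project"
SA_NAME="hugo-deployer"
ROLE_ID="hugoDeployer"
BUCKET="gs://blog.example.com"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# The object permissions listed in this post, comma-separated for gcloud.
PERMISSIONS="storage.objects.create,storage.objects.delete,storage.objects.get,storage.objects.list,storage.objects.update"

# Illustrative helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# 1. Create the service account.
run gcloud iam service-accounts create "$SA_NAME" --project="$PROJECT_ID"

# 2. Create the custom role holding only the object permissions.
run gcloud iam roles create "$ROLE_ID" --project="$PROJECT_ID" \
  --title="HugoDeployer" --permissions="$PERMISSIONS"

# 3. Bind the role on the bucket itself, not on the project.
run gcloud storage buckets add-iam-policy-binding "$BUCKET" \
  --member="serviceAccount:${SA_EMAIL}" \
  --role="projects/${PROJECT_ID}/roles/${ROLE_ID}"

# 4. Create a JSON key for the service account.
run gcloud iam service-accounts keys create key.json --iam-account="$SA_EMAIL"

# Encode a key file as one line of base64 for the GitHub secret.
encode_key() {
  base64 < "$1" | tr -d '\n'
}
```

Once a real `key.json` exists, `encode_key key.json` prints the value to paste into the secret. Delete the local key file afterwards, since anyone holding it can write to the bucket.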